Software Testing

What is Testing?

- Testing is the process of exercising a program with the specific intent of finding errors prior to delivery to the end user.

- It is an important activity in the software development process.

Testing Strategies

Various testing strategies are defined in the software engineering literature. All of them provide developers with a template for testing, and these templates should possess the following characteristics:

- Formal technical reviews must be conducted by the development team, so that many errors are eliminated before testing begins.

- Testing begins at the component level and works outward toward the integration of the entire computer-based system.

- For smaller projects, testing is usually conducted by the software developer. For larger projects, an independent test group is also involved to make testing more effective.

- Testing and debugging are different activities, but debugging must be accommodated in any testing strategy.

What Testing Shows

- Errors.

- Requirements conformance.

- Performance.

- An indication of quality.

Verification and Validation

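- Verification refers to the set of tasks that ensure that the software correctly implements a specific function. It is usually characterized as static: it relies mainly on reviews, inspections, and walkthroughs rather than on executing the program.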

Verification: “Are we building the product right?”

- Validation refers to a different set of tasks that ensure that the software that has been built is traceable to customer requirements. It is usually characterized as dynamic: it involves executing the software.

Validation: “Are we building the right product?”

A Strategy for Testing Conventional Software

Levels of Testing for Conventional Software

- Unit Testing – Begins at the center (vortex) of the spiral and concentrates on each unit of the software as implemented in source code.

- Integration Testing – The focus is on the design and construction of the software architecture.

- Validation Testing – Requirements established during the requirements analysis phase, as stated by the customer, are validated against the software that has been constructed.

- System Testing – The software and other system elements are tested as a whole. With each outward turn of the spiral, the scope of testing broadens until testing of the computer software is complete.

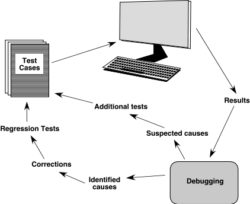
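To make the difference between the first two levels concrete, here is a minimal sketch. `PriceCalculator`, `TaxService`, and `StubTaxService` are hypothetical names chosen for illustration: the unit test replaces the collaborator with a stub so only the unit's own logic is exercised, while the integration test wires the unit to its real collaborator so the interface between them is exercised.

```python
# Hypothetical example: PriceCalculator is the unit under test,
# TaxService is a collaborator it depends on.


class TaxService:
    """Real collaborator: looks up a tax rate for a region."""

    RATES = {"EU": 0.20, "US": 0.07}

    def rate_for(self, region: str) -> float:
        return self.RATES[region]


class PriceCalculator:
    """Unit under test: computes a gross price from a net price."""

    def __init__(self, tax_service) -> None:
        self.tax_service = tax_service

    def total(self, net: float, region: str) -> float:
        return round(net * (1 + self.tax_service.rate_for(region)), 2)


class StubTaxService:
    """Stub with a fixed rate, so the unit can be tested in isolation."""

    def rate_for(self, region: str) -> float:
        return 0.10


def test_unit_price_calculator_with_stub() -> None:
    # Unit level: only PriceCalculator's own arithmetic is exercised.
    assert PriceCalculator(StubTaxService()).total(100.0, "any") == 110.0


def test_integration_price_calculator_with_tax_service() -> None:
    # Integration level: the interface between the two components is exercised.
    calc = PriceCalculator(TaxService())
    assert calc.total(100.0, "EU") == 120.0
    assert calc.total(100.0, "US") == 107.0


if __name__ == "__main__":
    test_unit_price_calculator_with_stub()
    test_integration_price_calculator_with_tax_service()
    print("all tests passed")
```

The test functions follow the plain-`assert` style that pytest discovers automatically; the `__main__` guard lets the file run without any test framework.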

Regression Testing

- Regression testing is the re-execution of some subset of tests that have already been conducted to ensure that changes have not propagated unintended side effects.

- Whenever software is corrected, some aspect of the software configuration (the program, its documentation, or the data that support it) is changed.

- Regression testing helps to ensure that changes (due to testing or for other reasons) do not introduce unintended behavior or additional errors.

- Regression testing may be conducted manually, by re-executing a subset of all test cases, or by using automated capture/playback tools.
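The capture/playback idea can be sketched in a few lines, assuming the behavior under test is a pure function of its input. The helpers `capture_baseline` and `replay` and the two versions of `format_name` are hypothetical: the baseline is recorded before a change, and after the change the same inputs are replayed to reveal unintended side effects.

```python
from typing import Callable, Dict, List


def capture_baseline(func: Callable[[str], str], inputs: List[str]) -> Dict[str, str]:
    """Capture: record current outputs as the expected (baseline) behavior."""
    return {arg: func(arg) for arg in inputs}


def replay(func: Callable[[str], str], baseline: Dict[str, str]) -> List[str]:
    """Playback: re-run recorded inputs and return those whose output changed."""
    return [arg for arg, expected in baseline.items() if func(arg) != expected]


def format_name_v1(raw: str) -> str:
    """Original behavior: trim surrounding whitespace, then title-case."""
    return raw.strip().title()


def format_name_v2(raw: str) -> str:
    """'Refactored' version that accidentally dropped the strip() call."""
    return raw.title()


if __name__ == "__main__":
    inputs = ["ada lovelace", "  alan turing ", "GRACE HOPPER"]
    baseline = capture_baseline(format_name_v1, inputs)  # recorded before the change
    print(replay(format_name_v1, baseline))  # []
    print(replay(format_name_v2, baseline))  # ['  alan turing ']
```

Only the input with surrounding whitespace exposes the regression, which is why the recorded subset should include boundary cases and not just typical values.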

Smoke Testing

- A common approach for creating “daily builds” for product software.

- Smoke testing steps:

Software components that have been translated into code are integrated into a “build.”

A build includes all data files, libraries, reusable modules, and engineered components that are required to implement one or more product functions.

A series of tests is designed to expose errors that will keep the build from properly performing its function.

The intent should be to uncover “show stopper” errors that have the highest likelihood of throwing the software project behind schedule.

The build is integrated with other builds and the entire product (in its current form) is smoke tested daily.
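The steps above can be sketched as a small daily smoke-test runner. `Build`, the component names, `config.ini`, and the checks are all hypothetical stand-ins; a real smoke test would exercise the actual build artifacts. Each check targets a potential "show stopper", and an exception raised by a check is treated as a failure rather than crashing the run.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Build:
    """Hypothetical daily build: named components plus bundled data files."""
    components: Dict[str, Callable[..., object]] = field(default_factory=dict)
    data_files: List[str] = field(default_factory=list)


SmokeCheck = Tuple[str, Callable[[Build], bool]]


def run_smoke_tests(build: Build, checks: List[SmokeCheck]) -> List[str]:
    """Run every check; return the names of failed checks (exceptions count as failures)."""
    failures = []
    for name, check in checks:
        try:
            ok = check(build)
        except Exception:
            ok = False
        if not ok:
            failures.append(name)
    return failures


# Hypothetical show-stopper checks for the build.
CHECKS: List[SmokeCheck] = [
    ("core components present", lambda b: {"login", "search"}.issubset(b.components)),
    ("login accepts valid user", lambda b: b.components["login"]("admin", "secret") is True),
    ("search returns a list", lambda b: isinstance(b.components["search"]("x"), list)),
    ("config file bundled", lambda b: "config.ini" in b.data_files),
]


if __name__ == "__main__":
    good = Build(
        components={
            "login": lambda user, pw: (user, pw) == ("admin", "secret"),
            "search": lambda query: [query],
        },
        data_files=["config.ini"],
    )
    broken = Build(components={"login": lambda user, pw: False})
    print(run_smoke_tests(good, CHECKS))    # []
    print(run_smoke_tests(broken, CHECKS))  # all four check names
```

Running every check instead of stopping at the first failure gives the team the full list of show stoppers in one daily run.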

Strategic Issues

- Specify product requirements in a quantifiable manner long before testing commences.

- State testing objectives explicitly.

- Understand the users of the software and develop a profile for each user category.

- Develop a testing plan that emphasizes “rapid cycle testing.”

- Build “robust” software that is designed to test itself.

- Use effective technical reviews as a filter prior to testing.

- Conduct technical reviews to assess the test strategy and test cases themselves.

- Develop a continuous improvement approach for the testing process.
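One way to read "software that is designed to test itself" is antibugging: a component checks its own invariants at run time and offers a built-in self-test. The `BoundedStack` class below is a hypothetical illustration of that idea, not a prescribed design. It raises errors explicitly instead of using `assert`, because Python's `-O` flag strips `assert` statements.

```python
class BoundedStack:
    """Hypothetical component that diagnoses its own errors (antibugging)."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._items: list = []
        self._check_invariants()

    def push(self, item) -> None:
        if len(self._items) >= self._capacity:
            raise OverflowError("stack is full")
        self._items.append(item)
        self._check_invariants()

    def pop(self):
        if not self._items:
            raise IndexError("pop from empty stack")
        item = self._items.pop()
        self._check_invariants()
        return item

    def _check_invariants(self) -> None:
        # Report internal corruption where it happens, not far downstream.
        if not 0 <= len(self._items) <= self._capacity:
            raise AssertionError("stack size out of bounds")

    @classmethod
    def self_test(cls) -> bool:
        """Built-in diagnostic: exercise a fresh probe instance and check LIFO order."""
        probe = cls(2)
        probe.push("a")
        probe.push("b")
        return probe.pop() == "b" and probe.pop() == "a"


if __name__ == "__main__":
    print(BoundedStack.self_test())  # True
```

A deployed system could call `self_test()` at start-up, so that certain classes of error are diagnosed by the software itself.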

Testing Strategy applied to Object-Oriented Software

- Must broaden testing to include the detection of errors in analysis and design models

- Unit testing loses some of its meaning and integration testing changes significantly

- Use the same philosophy as in conventional software testing, but a different approach

- Test “in the small” and then work out to testing “in the large”

- Testing in the small involves class attributes and operations; the main focus is on communication and collaboration within the class

- Testing in the large involves a series of regression tests to uncover errors due to communication and collaboration among classes

- Finally, the system as a whole is tested to detect errors in fulfilling requirements
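A minimal sketch of the two scopes, using hypothetical `Account` and `Bank` classes. Testing in the small drives a sequence of operations on one class and checks the resulting attribute state. Testing in the large checks how classes collaborate; here it verifies that a failed transfer leaves both accounts consistent.

```python
class Account:
    """Hypothetical class: an attribute (balance) plus operations on it."""

    def __init__(self, owner: str, balance: int = 0) -> None:
        self.owner = owner
        self.balance = balance

    def deposit(self, amount: int) -> None:
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self.balance += amount

    def withdraw(self, amount: int) -> None:
        if amount <= 0 or amount > self.balance:
            raise ValueError("invalid withdrawal")
        self.balance -= amount


class Bank:
    """Hypothetical collaborator that coordinates Account objects."""

    def transfer(self, source: Account, target: Account, amount: int) -> None:
        source.withdraw(amount)  # raises before target is touched if invalid
        target.deposit(amount)


def test_account_in_the_small() -> None:
    # Class testing: a sequence of operations and the resulting state.
    acct = Account("ada", 100)
    acct.deposit(50)
    acct.withdraw(30)
    assert acct.balance == 120
    try:
        acct.withdraw(500)
    except ValueError:
        pass
    else:
        raise AssertionError("overdraft should be rejected")
    assert acct.balance == 120  # a rejected operation leaves state unchanged


def test_bank_transfer_in_the_large() -> None:
    # Collaboration testing: errors in how Bank and Account interact.
    a, b = Account("ada", 100), Account("alan", 0)
    Bank().transfer(a, b, 40)
    assert (a.balance, b.balance) == (60, 40)
    try:
        Bank().transfer(a, b, 1000)
    except ValueError:
        pass
    else:
        raise AssertionError("transfer beyond balance should be rejected")
    assert a.balance + b.balance == 100  # money is neither created nor lost


if __name__ == "__main__":
    test_account_in_the_small()
    test_bank_transfer_in_the_large()
    print("all tests passed")
```

As classes are added or changed, both test functions are re-run together, which is the regression aspect of testing in the large.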

WebApp Testing

- The content model for the WebApp is reviewed to uncover errors.

- The interface model is reviewed to ensure that all use cases can be accommodated.

- The design model for the WebApp is reviewed to uncover navigation errors.

- The user interface is tested to uncover errors in presentation and/or navigation mechanics.

- Each functional component is unit tested.

- Navigation throughout the architecture is tested.

- The WebApp is implemented in a variety of environmental configurations and is tested for compatibility with each configuration.

- Security tests are conducted in an attempt to exploit vulnerabilities in the WebApp or within its environment.

- Performance tests are conducted.

- The WebApp is tested by a controlled and monitored population of end-users. The results of their interaction with the system are evaluated for content and navigation errors, usability concerns, compatibility concerns, and WebApp reliability and performance.
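Part of navigation testing can be automated against the navigation model itself. In this sketch, the site is assumed to be modeled as a dictionary that maps each page to the pages it links to (the page paths are hypothetical). A breadth-first search from the home page finds pages that users cannot reach, and a scan of all links finds links that point to pages that do not exist. A real test would also crawl or drive the rendered WebApp; this only checks the model.

```python
from collections import deque
from typing import Dict, List, Set, Tuple


def check_navigation(
    site: Dict[str, List[str]], home: str
) -> Tuple[List[Tuple[str, str]], Set[str]]:
    """Return (broken links, unreachable pages) for a navigation model."""
    # A link is broken if its target is not a page in the model.
    broken = [
        (page, target)
        for page, links in site.items()
        for target in links
        if target not in site
    ]
    # Breadth-first search from the entry point over valid links.
    seen = {home}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in site.get(page, []):
            if target in site and target not in seen:
                seen.add(target)
                queue.append(target)
    unreachable = set(site) - seen
    return broken, unreachable


if __name__ == "__main__":
    site = {
        "/": ["/products", "/about"],
        "/products": ["/products/1", "/cart"],
        "/products/1": ["/products"],
        "/about": ["/"],
        "/legacy-promo": ["/"],
    }
    broken, unreachable = check_navigation(site, "/")
    print(broken)       # [('/products', '/cart')]
    print(unreachable)  # {'/legacy-promo'}
```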

High Order Testing

- Validation testing – Focus is on software requirements.

- System testing – Focus is on system integration.

- Alpha/Beta testing – Focus is on customer usage.

- Recovery testing – Forces the software to fail in a variety of ways and verifies that recovery is properly performed.

- Security testing – Verifies that protection mechanisms built into a system will, in fact, protect it from improper penetration.

- Stress testing – Executes a system in a manner that demands resources in abnormal quantity, frequency, or volume.

- Performance testing – Tests the run-time performance of software within the context of an integrated system.
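Recovery testing can be sketched with fault injection. Here the recovery mechanism is assumed to be a bounded retry; other systems might recover through checkpoints, failover, or human intervention. `FlakyStore`, `read_with_recovery`, and `ServiceUnavailable` are hypothetical. The tests force the dependency to fail and verify both that recovery succeeds when failures are transient and that the component fails cleanly when they persist.

```python
from typing import Callable


class FlakyStore:
    """Hypothetical dependency with injected faults: the first `failures` calls fail."""

    def __init__(self, failures: int) -> None:
        self.remaining_failures = failures
        self.calls = 0

    def read(self, key: str) -> str:
        self.calls += 1
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise ConnectionError("injected fault")
        return f"value-of-{key}"


class ServiceUnavailable(Exception):
    """Raised when recovery is impossible, so callers see one clear error."""


def read_with_recovery(read: Callable[[str], str], key: str, attempts: int = 3) -> str:
    """Retry transient ConnectionErrors, up to `attempts` calls in total."""
    for _ in range(attempts):
        try:
            return read(key)
        except ConnectionError:
            continue
    raise ServiceUnavailable(f"gave up on {key!r} after {attempts} attempts")


def test_recovers_from_transient_faults() -> None:
    store = FlakyStore(failures=2)
    assert read_with_recovery(store.read, "k") == "value-of-k"
    assert store.calls == 3


def test_fails_cleanly_when_faults_persist() -> None:
    store = FlakyStore(failures=5)
    try:
        read_with_recovery(store.read, "k")
    except ServiceUnavailable:
        pass
    else:
        raise AssertionError("expected ServiceUnavailable")
    assert store.calls == 3  # the retry budget is respected


if __name__ == "__main__":
    test_recovers_from_transient_faults()
    test_fails_cleanly_when_faults_persist()
    print("all tests passed")
```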

The Debugging Process

Debugging Techniques

- Brute force / testing

- Backtracking

- Induction

- Deduction
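Induction and deduction are often grouped under cause elimination, which narrows down the possible causes through binary partitioning. A common concrete form is bisecting an ordered list of candidates, such as successive builds, to find the first one that fails; `git bisect` applies the same idea to commit history. This sketch assumes the failure is monotonic (once a candidate fails, every later one also fails). The `passes` predicate is a hypothetical stand-in for running the failing test case against a candidate.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def first_failing(candidates: Sequence[T], passes: Callable[[T], bool]) -> int:
    """Binary partitioning: return the index of the first candidate that fails.

    Invariant: every index below `lo` passes, every index at or above `hi` fails.
    Returns len(candidates) if no candidate fails.
    """
    lo, hi = 0, len(candidates)
    while lo < hi:
        mid = (lo + hi) // 2
        if passes(candidates[mid]):
            lo = mid + 1  # the cause lies in the later half
        else:
            hi = mid      # mid fails, so the first failure is at or before mid
    return lo


if __name__ == "__main__":
    builds = list(range(1, 101))  # hypothetical build numbers 1..100
    idx = first_failing(builds, lambda build: build < 73)  # bug assumed to enter in build 73
    print(builds[idx])  # 73
```

Each probe halves the remaining search space, so 100 builds need at most seven test runs instead of up to a hundred.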

Correcting the Error

Is the cause of the bug reproduced in another part of the program?

In many situations, a program defect is caused by an erroneous pattern of logic that may be reproduced elsewhere.
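As a hypothetical illustration, suppose a page-count bug (`items // per_page`, which drops the last, partially filled page) was found in a report module, and a search revealed the same pattern in an API module. One way to correct every occurrence at once is to move the logic into a single helper. All function names below are illustrative assumptions.

```python
def ceil_div(numerator: int, denominator: int) -> int:
    """Integer ceiling division for a positive denominator."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    # Floor division of the negated value, negated again, rounds up.
    return -(-numerator // denominator)


# Before the fix, both call sites repeated the flawed logic: items // per_page.
def report_page_count(rows: int, rows_per_page: int = 50) -> int:
    return ceil_div(rows, rows_per_page)


def api_page_count(records: int, page_size: int = 20) -> int:
    return ceil_div(records, page_size)


if __name__ == "__main__":
    # Boundary cases that the original pattern got wrong (101 rows need 3 pages).
    print(report_page_count(100), report_page_count(101))  # 2 3
    print(api_page_count(0), api_page_count(21))           # 0 2
```

Because the logic now lives in one place, a later fix cannot be applied to one copy and missed in another.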

What “next bug” might be introduced by the fix I’m about to make?

Before the correction is made, the source code (or, better, the design) should be evaluated to assess coupling of logic and data structures.

What could we have done to prevent this bug in the first place?

This question is the first step toward establishing a statistical software quality assurance approach. If you correct the process as well as the product, the bug will be removed from the current program and may be eliminated from all future programs.